The Good, the Bad and the Playful Fake

Some weeks ago a friend introduced me to TikTok, a content delivery app that consists of streaming short-form videos of a mere 5–15 seconds. Like other photo/video apps, it has an extensive set of filters that enable all kinds of manipulation. Fun and exciting, it uses the right tools to lure you into the TikTok world. Interestingly, unlike other social platforms, TikTok is completely AI-driven. It takes a few minutes of watching some random videos for the system to learn all your preferences. It rapidly begins to show videos by random users that are hard to ignore or swipe away out of disinterest. The more it is used, the better it gets. In the absence of any human intervention, authorship or guidance, how much faith do I have in a fully computer-controlled system that knows my tastes better than I do? Clearly the movement of and calculation through data imply new power relations and economic values and question the validity of knowledge, but do they also offer new forms of agency, experiences or control?

National Geographic’s fake pyramids

An early example of image manipulation is the 1982 cover of National Geographic magazine. For this issue National Geographic manipulated a photo of the Pyramids of Giza in Egypt taken by Gordon Gahan. Using high-end digital photographic equipment, the magazine’s photo editor slightly altered the horizontal image to fit the vertical cover by placing the two pyramids closer together. Gahan complained when he saw the result – even though he himself had paid the camel drivers to walk back and forth in front of the pyramids to capture the right scene – and a long discussion among photo professionals followed about whether this was a falsification or a modification. The question of course is: what is reality and who decides? Judging from a 2016 article by editor-in-chief Susan Goldberg, National Geographic still seems to feel uncomfortable. Explaining how the magazine keeps “photography honest in the era of Photoshop”, Goldberg begins the article with the apparently traumatic pyramids image, explaining “why they’ll never move the pyramids again”.

National Geographic, Vol. 161, No. 2, February 1982

The discussion about what is fake and what is real intensified around Donald Trump’s presidential campaign in 2015–16. Trump began using the term fake for any news that didn’t appeal to him, i.e., almost everything that put him in a slightly less favourable light. Around the same time websites popped up presenting and exposing fake news stories. A mere three years later, when doing a quick search for the “latest fake news”, I learned that newspapers dedicate parts of their websites to informing readers about the latest fake news: from The Independent to Wired and the BBC. The Dutch government tops the list with an advertisement and the slogan “Recognise fake news. Watch the checklist. Is this real news?” Slowly browsing through the different sites, I indeed recognise some things as fake, particularly articles that overstate the message or use imagery that is badly photoshopped. Yet there are also many good writers and photoshoppers who can construct a canny and convincing (visual) narrative. Browsing the news sites, a strange feeling of unease creeps in; many years ago I studied image cultures, I’ve co-directed the Centre for the Study of the Networked Image at London South Bank University since 2015, and sometimes even I find it hard to spot the differences.

In 1710, philosopher George Berkeley asked the question: “If a tree falls in a forest and no one is around to hear it, does it make a sound?” More recently, Lawrence Lessig is said to have remarked that if a tree falls in a deserted forest and nobody is around to tweet it then it didn’t really fall. The question of what is fake or real is perhaps not particularly relevant. In the end any fact is always subjective. What is more important is to understand the reason why and the context in which things happen. Facts and fictions don’t usually happen in isolation; they are embedded in a context and it is up to the receivers to find out more about that context and make up their own minds. So, rather than discussing what is real and not-real, it is more urgent to learn about and to imagine the tree that fell in the desolate forest: the one we might not see or recognise, not necessarily to make it public, but to observe and perceive in order to understand what happened.

From manipulation to deepfake

Around the end of 2017 the term deepfake emerged in the Reddit community, signalling a trend in which users shared videos, mostly involving celebrities, and swapped people’s faces onto the bodies of actors/actresses in porn videos. While remixing and appropriation are nothing new in popular culture and art, the technology that is available now makes it increasingly easy to create remixes. Deepfake videos are created using machine learning algorithms, which train their skill on selected images. After learning enough about the specifics of the images, the algorithm can construct its own compositions. Most of these tools are available as open source and claim to be “easy to use” for even a low-level programmer. Even though the results may show strange tracking effects or other glitches, the ability to easily exchange faces and bodies quickly went viral. While some discuss the ethics and privacy issues – probably rightfully so – one of the “deepfakers” made an interesting remark: “It’s not a bad thing for more average people [to] engage in machine learning research.” In February 2018 deepfakes were banned from Reddit for spreading involuntary porn, and some other platforms followed, yet the distribution qualities of the Web had done their work and many ways to download and (re)create remain available. To help the victims in the videos, in 2019 the US announced the Accountability Act, which is supposed to defend each and every person from false appearances. And a few days before writing this article a deepfake app, DeepNude, was taken down a few hours after being launched and exposed. It seems we’re learning, or are we?

From fake to playful imagination

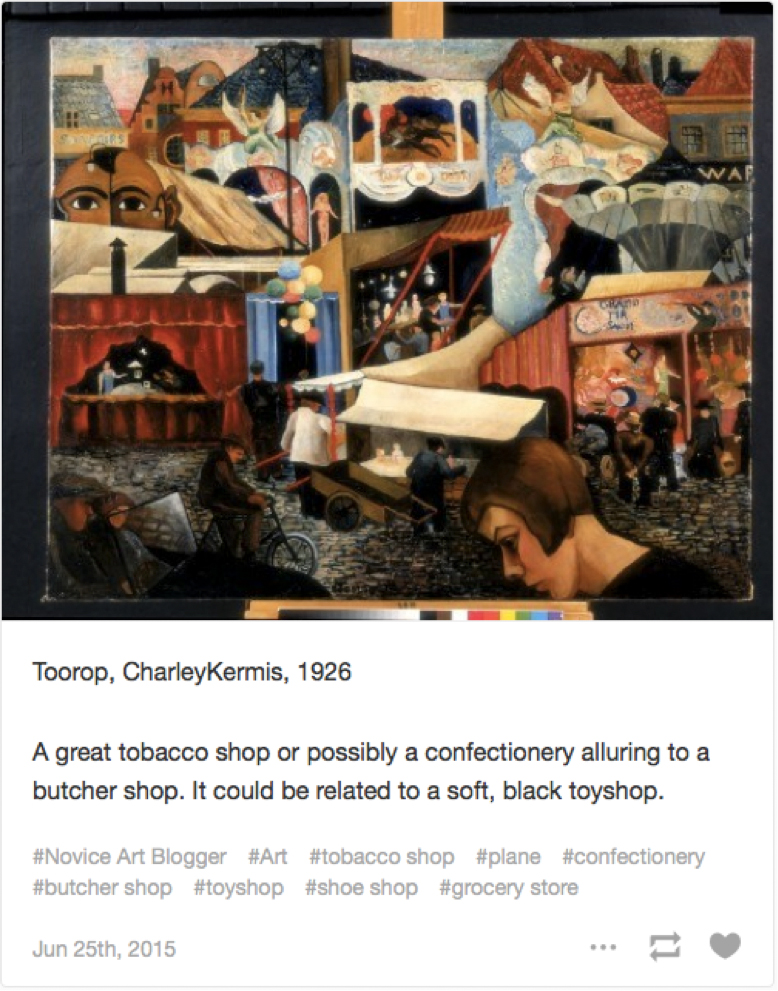

Despite the quick dismantling and legislation, deepfake has a much longer history – just think of appropriation art and remixing. Many artists for many years have manipulated images, texts, sounds and videos. Some of these are merely aesthetic outcomes, for example, Mario Klingemann’s investigations into the creative potential of machine learning and AI, which he termed neurography, in which he trains algorithms to create new images based on the learning of large sets of existing images (his work Uncanny Mirror is also part of the exhibition at HeK). Or fun experiments such as Matthew Plummer Fernandez’s Novice Art Blogger, a software tool that mimics art-speak by providing new captions for abstract artworks based on the outcomes of an image-classification algorithm.

Matthew Plummer Fernandez, Novice Art Blogger (2015)

Others are political, such as tactical media art, a “movement” that was particularly active in the 1990s with its media acts, or interventions, in the form of a hack, a prank or a hoax. The earlier experiments were often done on the fly, following the “quick-and-dirty” approach of the camcorder revolution, but later examples show the elaborate efforts used to provoke the intended audience. For example, the recent Mark Zuckerberg video in which Zuckerberg explains the power of Facebook, posted on Instagram by its creators Bill Posters and Daniel Howe in collaboration with advertising company Canny, or The Yes Men’s recent campaign on 16 January 2019. In response to Trump’s presidency they made an unauthorised special edition of the Washington Post with the headline “Unpresidented. Trump Hastily Departs White House, Ending Crisis”, dating it 1 May 2019. More than a prank, the project is a vision, a playful imaginary, surpassing the fake by claiming the future. Interestingly, the fake Washington Post was an analogue attempt to create confusion, but the editorial depth and the meticulous detailing in the articles, the made-up historical references and the experts cited created a desire and a belief that led to the hope that change is possible. In the end, perhaps not all fake is bad.

Embracing the fake by deep-understanding

What all the above examples have in common is that they are rapidly exposed, some sooner than others, but in general the fake is swiftly dismantled by users, readers and viewers who are looking beyond the initial information they receive. In these cases audiences are not merely naive or gullible spectators, but are investigators who delve into the context around the “news” to find other types of meaning. The fakeness becomes transparent and it’s clear that its algorithms function in larger ideological infrastructures, and the context around the “fact” thus creates a more interesting discussion – whether it is about Trump’s presidency, Zuckerberg’s (dis)honesty, the effects of porn on women or the ridiculing of art-speak. But there is another place where deep learning takes place which at first sight is less obvious, seems less harmful and certainly less political. These practices take place around issues that are mundane or unimportant to most people. I’m referring to the use of image manipulation and particularly the artificial generation of images in brochures ranging from IKEA catalogues (in 2014, 75% of the images were artificially rendered) to architectural visions of homes and future cities’ infrastructures. Known as CGI, computer-generated imagery, these applications build non-existing spaces, objects and people. At times mesmerising, there is also an uncanny feeling that these places may exist somewhere in a far corner of the world. What these examples demonstrate is the ease of acceptance of deep learning through the mundane, where its consequences are hardly noticed. CGI seeps into our lives, and the fake is uncritically accepted and normalised. We live in a moment where the absurdities of fake and real coalesce and where formalisms interweave into the mundane to release and congeal further dynamics.
While our impulse is to fight for real instead of fake, perhaps rather than resisting we should accept and understand the deepfake for what it is – the better fake that is neither true nor false. Then we will be able to confront or play with it on its own terms. By embracing the entanglement of human and machine, which requires a deep understanding of the inner workings, new kinds of knowledge-power may come about that both challenge and extend the binary between fake and real.

Annet Dekker [http://aaaan.net] is an independent researcher/curator. Currently she is Assistant Professor Media Studies: Archival and Information Studies at the University of Amsterdam and Visiting Professor and co-director of the Centre for the Study of the Networked Image at London South Bank University. She publishes widely on issues of digital art, curation and preservation in international, peer-reviewed journals, books and magazines, and has edited several publications, most recently, Collecting and Preserving Net Art. Moving Beyond Conventional Methods (2018) and Lost and Living in Archives. Collectively Shaping New Memories (2017).