AI, Can You See Me?

I first discovered machine learning during a week-long workshop with New York–based programmer and artist Gene Kogan at ECAL. The start of the week proved complicated for me, as I could not test all the examples from ml4a (Machine Learning for Artists, one of the many resources that help artists work with machine learning). The facial detection algorithms were struggling to detect me, and they were struggling because I’m black.

As one can imagine, I felt frustrated. Despite moving, gesturing and changing places, getting the program to see me was quite an effort. I had to find the perfect position. After testing different machine learning tools such as YOLO Darknet and ml5 (Machine Learning 5), I kept getting the same result: it was easier for the machine to recognise the people behind or around me than to recognise me.

The Human Detector

So during the workshop, I decided to create the Human Detector. The Human Detector is an experience that invites the user to do whatever it takes to avoid being recognised by AI. In doing so, I recreated my own experience with ml4a and AI, but I inverted the rules so that I would win every time. The aim of the experience is for the user to press a button without being detected as a human being by a camera connected to a detection system. The user must be as creative as I had to be in order to succeed.

The detection system uses PoseNet, a machine learning–based computer vision tool that estimates human poses in real time. It detects not only a face but also shoulders, elbows and other body parts. When I tried PoseNet, I realised that it succeeded in perceiving me even in the darkest conditions I tested. So my plan did not work: I had to be just as creative as other users to succeed at my own game. But I asked myself: why can PoseNet recognise me as a human being while ml4a’s face tracker can’t?
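The rule of the game can be sketched in a few lines. This is a minimal illustration, not the Human Detector's actual code: it assumes each detected pose arrives as a list of keypoints carrying a body part name and a confidence score (loosely modelled on PoseNet's output), and the threshold and the function names are hypothetical.

```python
from typing import Dict, List

# Hypothetical values: the original project does not state its thresholds.
MIN_KEYPOINT_SCORE = 0.5   # confidence above which a body part counts as "seen"
MIN_VISIBLE_PARTS = 1      # how many seen parts make a "human"


def visible_parts(pose: Dict, min_score: float = MIN_KEYPOINT_SCORE) -> List[str]:
    """Return the names of the body parts detected with enough confidence."""
    return [kp["part"] for kp in pose["keypoints"] if kp["score"] >= min_score]


def is_human_detected(
    poses: List[Dict],
    min_score: float = MIN_KEYPOINT_SCORE,
    min_parts: int = MIN_VISIBLE_PARTS,
) -> bool:
    """The player loses as soon as any pose shows enough confident body parts."""
    return any(len(visible_parts(p, min_score)) >= min_parts for p in poses)


if __name__ == "__main__":
    # Toy frame: the face is hidden, but an elbow is still clearly visible.
    frame = [{"keypoints": [
        {"part": "nose", "score": 0.1},
        {"part": "leftElbow", "score": 0.8},
    ]}]
    print(visible_parts(frame[0]))
    print("detected" if is_human_detected(frame) else "not detected")
```

Because any confident body part counts, hiding the face alone is not enough under this rule, which matches the experience described above.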

Computer vision and black people

As I continued my research, I came across a lot of pictures where facial detection failed to recognise black people. Why is this so common? Why does computer vision have trouble seeing black people?

Computer vision uses machine learning techniques to detect faces. These techniques require a training set: the material from which the program learns how to process information. In the case of face detection, the training set is often composed of a large number of facial images. So computer vision’s ability to recognise different types of faces depends on the diversity of the training set. This means that computer vision can only reliably recognise what it has been trained to see.
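One simple way to start looking at a training set's diversity is to count how often each group appears in it. The sketch below assumes the images come with some group annotation, which many real datasets do not provide. The labels and the function name are hypothetical placeholders.

```python
from collections import Counter
from typing import Dict, Iterable


def group_shares(labels: Iterable[str]) -> Dict[str, float]:
    """Return the fraction of the training set that each group represents."""
    counts = Counter(labels)
    total = sum(counts.values())
    if total == 0:
        return {}
    return {group: count / total for group, count in counts.items()}


if __name__ == "__main__":
    # Placeholder annotations, one per training image (not real data).
    annotations = ["group_a", "group_a", "group_a", "group_b"]
    for group, share in group_shares(annotations).items():
        print(f"{group}: {share:.0%} of the training set")
```

A heavily skewed result does not prove that a model is biased, but it shows where the model has had fewer examples to learn from.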

I understood that having an inclusive training set allows you to have inclusive results. So I asked myself: Is there really a lack of diversity in training set data? If so, is this an unconscious choice on the part of developers or is it deliberate? What access do we have to diversity in data? And especially, how can we find out this information?

Highlight biases

To better understand the issue, I started looking at the work of Joy Buolamwini, a researcher at MIT. In one exercise, she had to communicate with a robot but the robot could not recognise her, so she decided to put on a white mask so she could be detected. Joy calls this problem the “coded gaze” but we can also talk about algorithmic bias. I was particularly interested in her Gender Shades project and how it evaluates the accuracy of AI-powered gender classification products. The results show that the software misidentified darker-skinned women far more frequently than lighter-skinned men.
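The idea of evaluating accuracy group by group, rather than as a single overall score, can be sketched as follows. This is an illustration of the general approach only: the records are made-up placeholders, not Gender Shades data, and the function name is hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

# Each record: (subgroup, true label, label predicted by the classifier)
Record = Tuple[str, str, str]


def error_rate_by_group(records: Iterable[Record]) -> Dict[str, float]:
    """Compute the share of misclassified examples within each subgroup."""
    totals: Dict[str, int] = defaultdict(int)
    errors: Dict[str, int] = defaultdict(int)
    for group, truth, predicted in records:
        totals[group] += 1
        if truth != predicted:
            errors[group] += 1
    return {group: errors[group] / totals[group] for group in totals}


if __name__ == "__main__":
    # Placeholder results for two subgroups (illustrative only).
    results = [
        ("subgroup_1", "female", "female"),
        ("subgroup_1", "female", "male"),
        ("subgroup_2", "male", "male"),
        ("subgroup_2", "male", "male"),
    ]
    for group, rate in error_rate_by_group(results).items():
        print(f"{group}: {rate:.0%} misclassified")
```

A single accuracy figure over all four records would hide the fact that every error falls on one subgroup. Breaking the results down makes that visible.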

When I tried to create other projects about algorithmic bias, I faced an obstacle: the lack of transparency in the algorithms and training sets did not allow me to fully experiment with the programs or understand where the bias came from or how it came to be. The solution I found was to highlight the biases of these algorithms as well as those of our society. The second project I initiated was Face Access: a fictional project where you can pretend to access some institutions with a picture of your face.

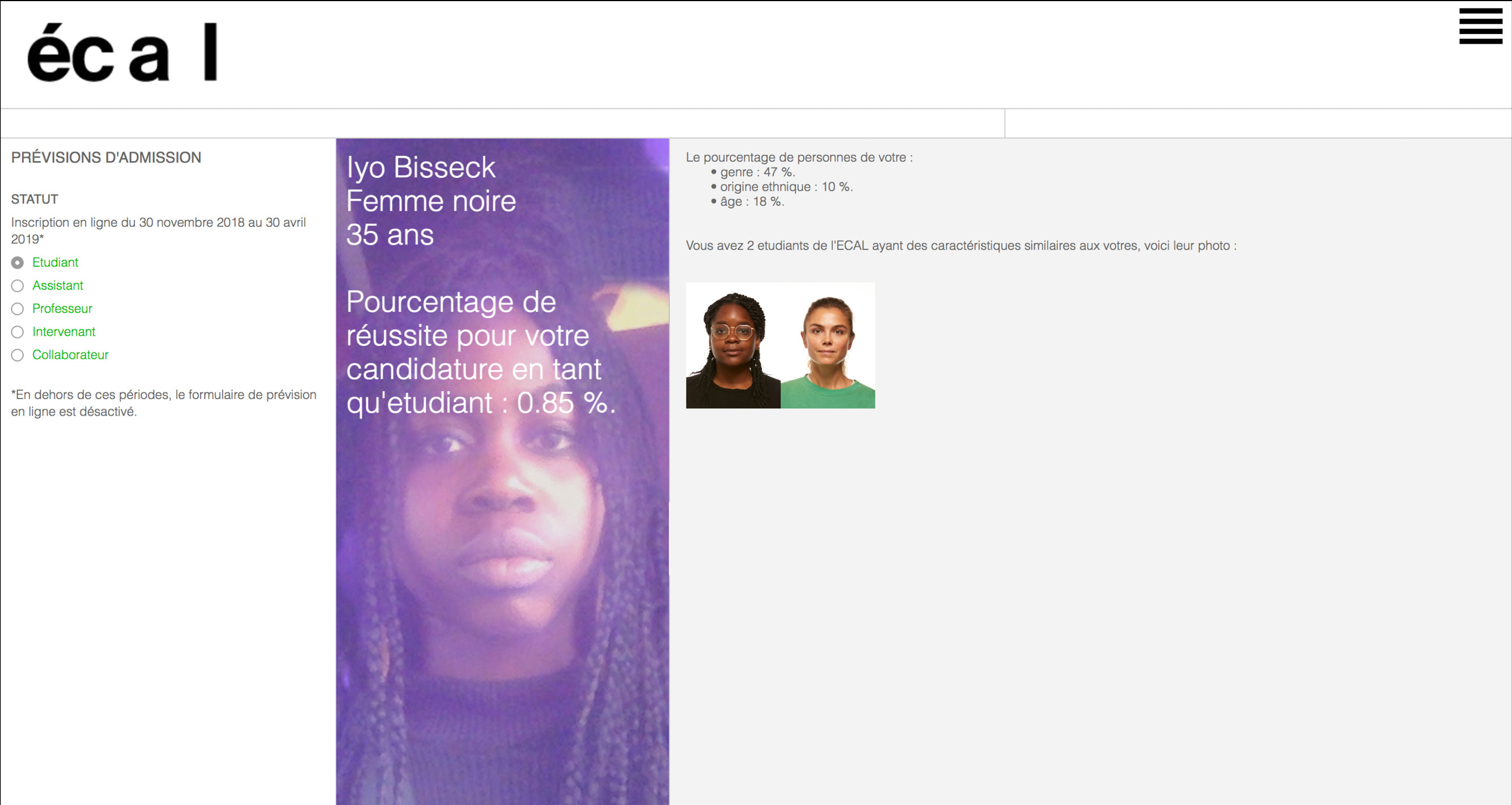

For my training set, I took data from an open photo database from schools in Lausanne, including Manufacture, EPFL and ECAL. After analysing the photos with the Face++ tools, I created a platform which tells you your chances of success if you apply for the desired status: student, assistant or teacher. To contextualise the result, you are also shown the statistics and photos of people in the database who are assumed to have the same physical profile (age, gender, ethnicity). As a user, you should then be able to observe the dynamics at play in our society and consider questions such as: Can your result be explained by algorithmic bias or by social bias? Or both?

Face Access (screenshot)
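The kind of statistic described above could be computed along these lines. This is a sketch under stated assumptions, not the Face Access implementation: it assumes the "chance of success" is the share of people holding the desired status who have the same estimated profile as the user, and the record layout, field names and values are hypothetical.

```python
from typing import Dict, List, Tuple

# (age band, gender, ethnicity) as estimated by a face-analysis tool
Profile = Tuple[str, str, str]


def chance_of_access(people: List[Dict], profile: Profile, status: str) -> float:
    """Share of people holding `status` whose estimated profile matches `profile`."""
    holders = [p for p in people if p["status"] == status]
    if not holders:
        return 0.0
    matching = [p for p in holders if p["profile"] == profile]
    return len(matching) / len(holders)


if __name__ == "__main__":
    # Placeholder database entries (not real data).
    database = [
        {"status": "teacher", "profile": ("age_band_1", "gender_x", "ethnicity_x")},
        {"status": "teacher", "profile": ("age_band_1", "gender_y", "ethnicity_y")},
        {"status": "student", "profile": ("age_band_2", "gender_x", "ethnicity_x")},
    ]
    me = ("age_band_1", "gender_x", "ethnicity_x")
    print(f"teacher: {chance_of_access(database, me, 'teacher'):.0%}")
```

Any figure computed this way carries both sources of bias at once: the errors of the face-analysis tool that assigns the profiles, and the existing make-up of the institutions in the database.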

Fight for transparency

Joy Buolamwini made similar observations and created the Algorithmic Justice League, a collective that aims to unmask bias and bring more transparency to algorithms through projects like the Safe Face Pledge. The Safe Face Pledge “gives opportunities for organisations to make public commitments towards mitigating the abuse of facial analysis technology by addressing harmful bias, facilitating transparency and embedding commitments into business practices.” Cathy O’Neil, a data scientist and the author of Weapons of Math Destruction, talks about the danger of opaque, unregulated and uncontestable mathematical models and explains that algorithms, with the training sets we give them, create their own reality. Transparency helps us to understand what underpins these realities and to know exactly what algorithmic bias lies behind them. This is why it is important to ask not only “AI, can you see me?”, but also “AI, can I see you?”.

References:

Cathy O’Neil – Weapons of Math Destruction: How Big Data Increases Inequality and Threatens Democracy (2016; New York, NY: Crown)

Further reading:

https://pair-code.github.io/what-if-tool/

https://www.media.mit.edu/posts/how-i-m-fighting-bias-in-algorithms/

https://www.bloomberg.com/opinion/articles/2018-10-16/amazon-s-gender-biased-algorithm-is-not-alone

https://ai.google/research/teams/brain/pair

https://www.thejustdatalab.com/

Iyo Bisseck is an interaction designer working at the crossroads of different fields such as art, social sciences, cognitive sciences and computer science. She is particularly interested in inclusive and activist projects.

https://iyo.io/